This method, originally developed by Dantzig in 1948, has been dramatically enhanced in the last decade, using advanced methods from numerical linear algebra. LP problems are usually solved via the Simplex method.

Optimizing an indefinite quadratic function is a difficult global optimization problem, and is outside the scope of most specialized quadratic solvers. Its true minimum or maximum is not found in the "interior" of the function but on its boundaries with the constraints, where there may be many locally optimal points. An optimizer will normally find a point in the "trough" with the best objective function value.Ī QP with an indefinite Hessian has a "saddle" shape - a non-convex function. Portfolio optimization problems are usually of this type.Ī QP with a semi-definite Hessian is still convex: It has a bowl shape with a "trough" where many points have the same objective value. You can picture the graph of these functions as having a "round bowl" shape with a single bottom (or top) - a convex function. The "best" QPs have Hessians that are positive definite (in a minimization problem) or negative definite (in a maximization problem). The quadratic objective function may be convex - which makes the problem easy to solve - or non-convex, which makes it very difficult to solve. QP problems, like LP problems, have only one feasible region with "flat faces" on its surface (due to the linear constraints), but the optimal solution may be found anywhere within the region or on its surface. A widely used QP problem is the Markowitz mean-variance portfolio optimization problem, where the quadratic objective is the portfolio variance (sum of the variances and covariances of individual securities), and the linear constraints specify a lower bound for portfolio return. Where X 1, X 2 and X 3 are decision variables. Quadratic Programming (QP) ProblemsĪ quadratic programming (QP) problem has an objective which is a quadratic function of the decision variables, and constraints which are all linear functions of the variables. This means that an LP Solver needs to consider many fewer points than an NLP Solver, and it is always possible to determine (subject to the limitations of finite precision computer arithmetic) that an LP problem (i) has no feasible solution, (ii) has an unbounded objective, or (iii) has a globally optimal solution (either a single point or multiple equivalent points along a line). no curves) on its outer surface, and an optimal solution will always be found at a 'corner point' on the surface (where two or more constraints intersect).

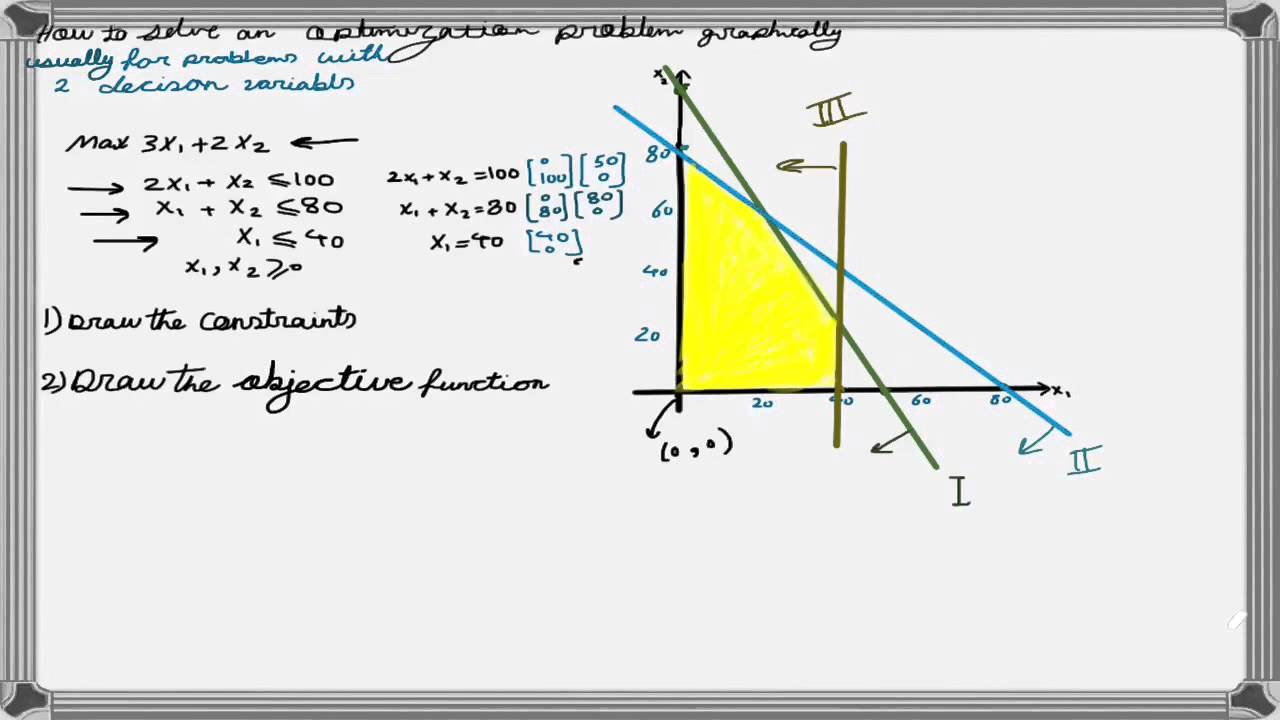

In contrast, an LP has at most one feasible region with 'flat faces' (i.e. In a non-convex NLP there may be more than one feasible region and the optimal solution might be found at any point within any such region. Since all linear functions are convex, linear programming problems are intrinsically easier to solve than general nonlinear (NLP) problems, which may be non-convex. The variables are multiplied by coefficients (75, 50 and 35 above) that are constant in the optimization problem they can be computed by your Excel worksheet or custom program, as long as they don't depend on the decision variables. where X 1, X 2 and X 3 are decision variables. A linear programming (LP) problem is one in which the objective and all of the constraints are linear functions of the decision variables.

0 kommentar(er)

0 kommentar(er)